A robots.txt file tells automated bots and web spiders which parts of your website they may crawl. These programs crawl the Web to find pages to add to their company’s search index. Some bots you want to have full access to your website, such as Google’s or Yahoo!’s; others you would rather keep out, such as spambots, content thieves, and e-mail collectors. Keep in mind that robots.txt is advisory: well-behaved crawlers follow its rules, but malicious bots can simply ignore them.
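
As a rough illustration of how a compliant crawler reads these rules, the sketch below parses a small robots.txt with Python's standard `urllib.robotparser` module. The rules, the bot name `BadBot`, the `is_allowed` helper, and the `example.com` URLs are all assumptions made up for this example; they are not taken from any real site or from SEO Audit.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot may crawl everything, "BadBot" nothing,
# and every other bot is kept out of /private/ only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the rules above let `user_agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(is_allowed("Googlebot", "https://example.com/private/report.html"))  # True
    print(is_allowed("BadBot", "https://example.com/index.html"))              # False
    print(is_allowed("OtherBot", "https://example.com/private/report.html"))   # False
    print(is_allowed("OtherBot", "https://example.com/index.html"))            # True
```

The check only reports what a rule-abiding crawler would do. A bot that ignores robots.txt is not stopped by it, so truly private content still needs real access control.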

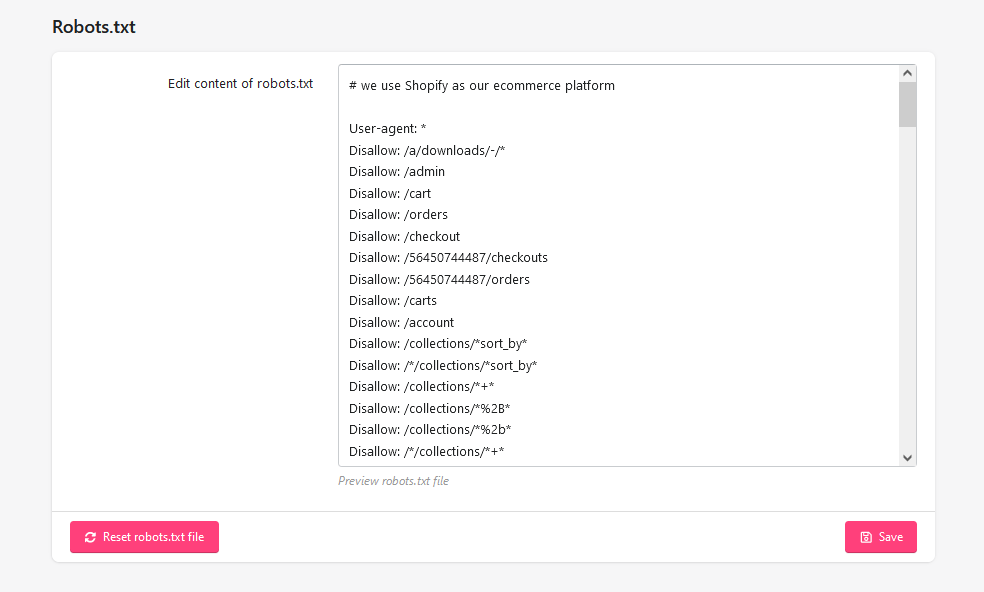

SEO Audit’s robots.txt generation tool creates a file with exclusion directives for files and directories that are not meant to be public and should not be crawled by search engines. You can edit the content of the robots.txt file or restore it to the default configuration.
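
To give a sense of what such a generated file might contain, here is a minimal sketch that builds exclusion directives from a list of paths. It is not SEO Audit's actual implementation: the `build_robots_txt` function and the `DEFAULT_PATHS` list are hypothetical names and values chosen only for illustration.

```python
from typing import Iterable

# Hypothetical default paths; a real tool would choose its own.
DEFAULT_PATHS = ["/admin/", "/tmp/", "/cgi-bin/"]


def build_robots_txt(disallowed_paths: Iterable[str], user_agent: str = "*") -> str:
    """Build robots.txt content with one Disallow line per path."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Prints a User-agent line followed by one Disallow line per default path.
    print(build_robots_txt(DEFAULT_PATHS), end="")
```

Restoring the default configuration would then amount to regenerating the file from the default list, while editing means changing the paths or adding groups for specific user agents.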